What if the apps on your phone knew where you were, what you were doing, what was nearby, and even what the weather was like outside, and then combined this information to react intelligently to your current situation? Would that be creepy or amazing? We will soon find out, it seems. At this week's Google I/O conference, the company introduced new tools that will allow app developers to create applications that customize themselves to a user's current context.

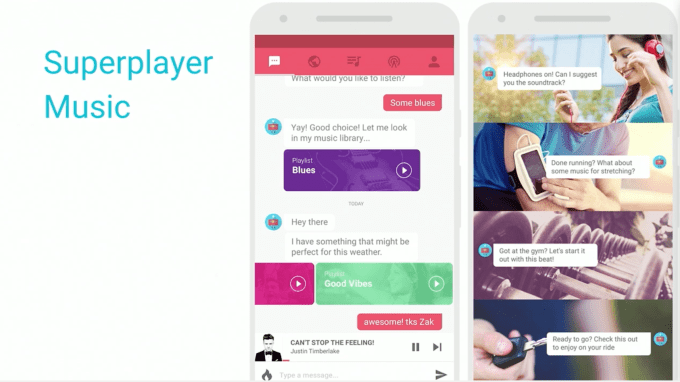

For example, a streaming music application could display an energetic playlist when you plug in your headphones and start jogging.

Or perhaps an app could alert you to stop by the pharmacy to pick up your medications – but it would only do so if you were driving, near the store, and the store was actually open.

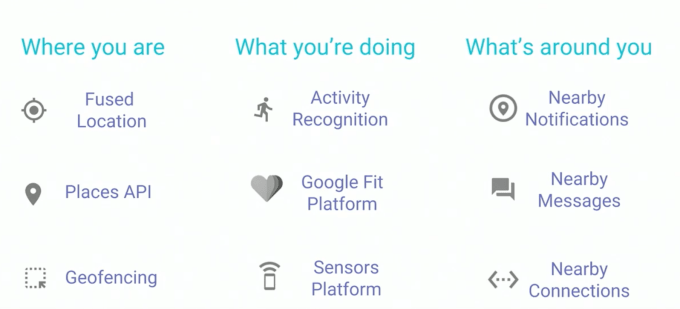

To make these sorts of smarter applications possible, Google is introducing a new "Awareness API" which will become available shortly after its I/O conference wraps.

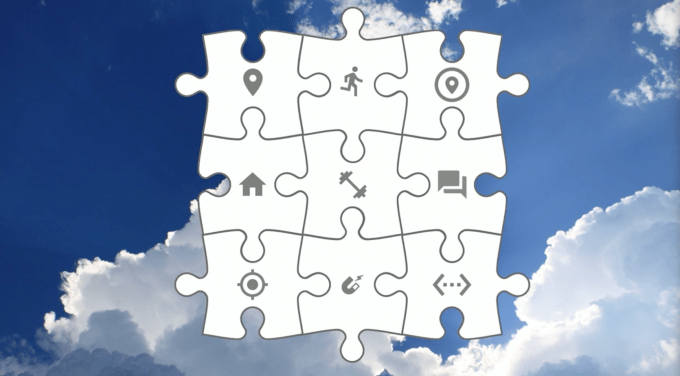

Effectively, this API combines a variety of functions previously available via other APIs, such as accessing a user's location or recognizing that they're now driving their vehicle. It can even sense nearby beacons and devices, which means it can tap into data from things like Android Wear smartwatches, or interoperate with devices like Chromecast (Google Cast) or Google's new smart speaker, Google Home.

Beyond the examples above, Google suggested a number of ways this functionality could be used in future applications.

For instance, a smarter alarm clock app could decide when to wake you up based on how late you stayed up the night before, and when your first meeting is that day.

A weather application could sense the Chromecast plugged into your bedroom TV and project the day's weather onto the screen.

An assistant application could wake up Google Home to tell you that it's time to leave for your calendar appointment.

A running app could immediately log your run for you, even if you forgot to start the tracking function.

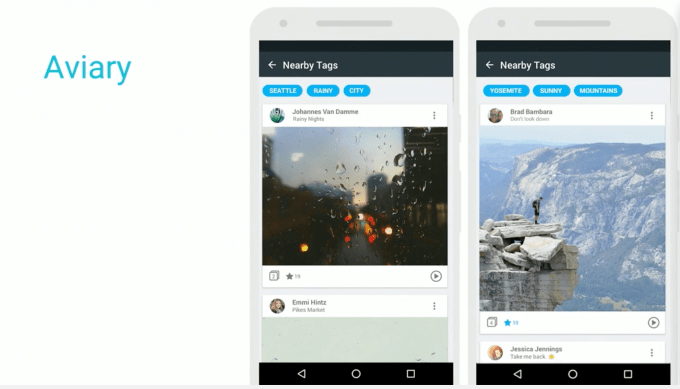

A launcher application could place your camera app front-and-center when you're outside because it knows you take a lot of nature photos. And, as an added bonus, when you snap a photo, the app tags it with the weather and your activity. These tags become part of the photo's metadata, allowing you to later search for "outdoor photos taken while running when it was sunny outside."

Some of these functions were possible before, if developers used multiple APIs. However, Google explained that calling upon multiple APIs, depending on conditions, can drain the battery or make the device run sluggishly due to increased RAM usage.

That could lead to users getting frustrated with the app and possibly even uninstalling it from their phone.

The new Awareness API, then, not only makes it more convenient to request all this information about a user's situation, but also does so while optimizing for system health. That means the apps get smarter without slowing the phone down or killing your battery.

The Awareness API actually contains two distinct APIs: the Fence API, which lets apps react to changes in the user's situation, and the Snapshot API, which lets apps request information about the user's current context.
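The difference between the two styles can be illustrated with a small, self-contained Python sketch. It is not the Awareness API itself: every name in it (`Context`, `AwarenessSketch`, `register_fence`, `snapshot`, `all_of`) is hypothetical and exists only for illustration. It assumes a fence behaves like a condition that triggers a callback when it becomes true, while a snapshot is a one-off read of the current context, and it reuses the pharmacy reminder from earlier in the article.

```python
from dataclasses import dataclass
from typing import Callable, List

# All names below are hypothetical; this models the idea, not Google's API.

@dataclass(frozen=True)
class Context:
    """A simplified picture of the user's situation at one moment."""
    activity: str       # e.g. "walking", "driving", "running"
    near_store: bool    # is the user close to the pharmacy?
    store_open: bool    # is the pharmacy currently open?

# In this sketch a fence is simply a condition over a Context.
Fence = Callable[[Context], bool]

def all_of(*fences: Fence) -> Fence:
    """Combine several fences; the result holds only when all of them hold."""
    return lambda ctx: all(fence(ctx) for fence in fences)

class AwarenessSketch:
    def __init__(self, initial: Context) -> None:
        self._context = initial
        self._fences: List[list] = []  # each entry: [fence, callback, last_state]

    def snapshot(self) -> Context:
        """Snapshot style: the app asks for the current context on demand."""
        return self._context

    def register_fence(self, fence: Fence, callback: Callable[[Context], None]) -> None:
        """Fence style: the app is called back when the condition becomes true."""
        self._fences.append([fence, callback, fence(self._context)])

    def update(self, new_context: Context) -> None:
        """Simulate the system observing a change in the user's situation."""
        self._context = new_context
        for entry in self._fences:
            fence, callback, was_true = entry
            is_true = fence(new_context)
            if is_true and not was_true:  # fire only on a false-to-true change
                callback(new_context)
            entry[2] = is_true

# Usage: remind the user only when driving, near the store, and the store is open.
alerts: List[str] = []
pharmacy_fence = all_of(
    lambda c: c.activity == "driving",
    lambda c: c.near_store,
    lambda c: c.store_open,
)
service = AwarenessSketch(Context("walking", near_store=False, store_open=True))
service.register_fence(pharmacy_fence, lambda c: alerts.append("Pick up your medications"))

service.update(Context("driving", near_store=False, store_open=True))  # not near: no alert
service.update(Context("driving", near_store=True, store_open=True))   # all true: alert
service.update(Context("driving", near_store=True, store_open=True))   # unchanged: no repeat

print(service.snapshot().activity)  # snapshot read of the latest context
print(alerts)
```

In this model the app never polls for changes; it declares the condition once and is notified when it becomes true, which is the behavior the fence approach is described as providing.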

While Google's suggested examples sound interesting, some of the real-world use cases implemented by partners who had early access to the API are a bit less inspiring.

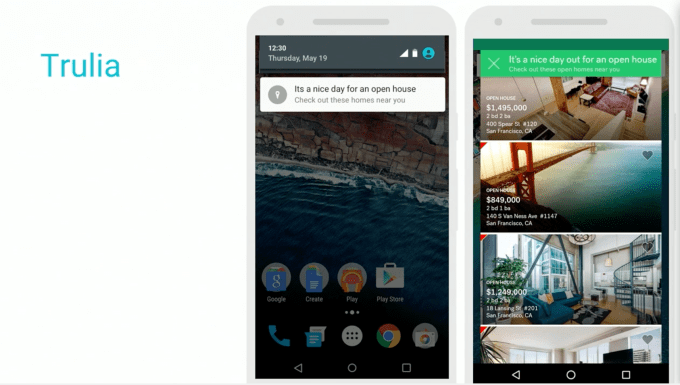

Real estate app Trulia, for instance, is using the API for smarter push notifications. That is, it will alert you to visit an open house only if you're nearby, you're walking, and it's nice outside.

Runkeeper will let you tag your running posts with the current weather.

GrubHub and Aviary are also tapping into the weather to personalize their apps.

Latin American music streaming app Superplayer Music, however, seems a little more fun. It will suggest music based on where you are and what you're doing. If you just got to the gym, it will suggest different music than if you're about to start a road trip, for example.

And Android launcher Nova is considering rewriting its app to be context-aware, meaning it will show you the right apps at the right time.

Creating a contextually aware mobile experience is something other startups have attempted before, often in the form of a launcher, like Aviate, which Yahoo acquired in 2014. But people have so far resisted having their phone's user interface overhauled in reaction to their surroundings.

That's why it makes sense for this situational awareness to become more deeply embedded in the apps themselves. Instead of asking users to readjust how they use their phone, the experience of using the phone just gets better as notifications get smarter and less bothersome, and as apps react to what you're doing and interoperate more seamlessly with the devices around you.

Developers can sign up for early access here.

Source: Android apps can now react to your environment
